In a shocking turn for quantum supremacy claims, a team of researchers managed to simulate Google’s 53-qubit quantum computer using 1,432 powerful GPUs, raising questions about the true boundaries between quantum and classical computing. This achievement doesn’t undermine the value of quantum computing — but it does reshape the conversation around what classical hardware is still capable of.

Let’s unpack how this monumental computational feat was achieved, why it matters, and what it means for the future of quantum supremacy.

Background: Google’s Quantum Supremacy Claim

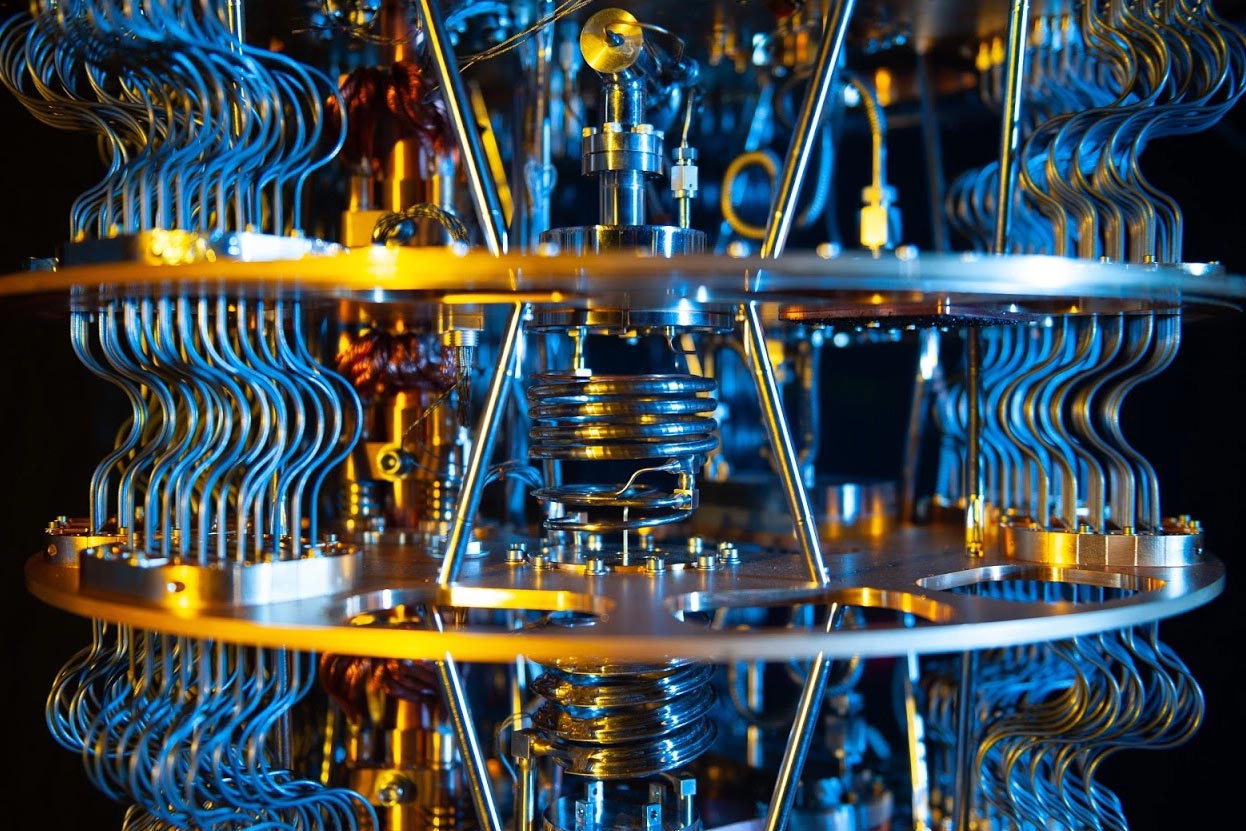

In 2019, Google’s quantum team stunned the world by announcing it had achieved quantum supremacy — a point where a quantum computer performs a task beyond the practical capabilities of classical computers. The device, named Sycamore, was a 53-qubit superconducting quantum processor that reportedly solved a random sampling problem in just 200 seconds. Google claimed the world’s most powerful supercomputers would need 10,000 years to perform the same task.

Quantum supremacy, however, doesn’t imply practical usefulness. It refers only to the point where a quantum machine outperforms classical ones on at least one task, however specific or abstract.

But then, something unexpected happened.

Enter the Challenge: Classical Supercomputing Fights Back

Fast-forward to a few years later. A research team — leveraging the power of 1,432 high-performance GPUs — replicated the same random circuit sampling that Google’s Sycamore quantum computer completed. And it didn’t take them 10,000 years. Instead, they did it in a few hours to a few days, depending on the parameters.

This counter-effort, using a classical supercomputer, redefined what is computationally possible with optimized algorithms and large-scale parallel processing.

So how did they do it?

The Power Behind 1,432 GPUs: How the Simulation Worked

At the heart of this success was a combination of smart optimization techniques, distributed computing frameworks, and powerful hardware. Here’s what made it work:

1. Tensor Network Methods

Rather than brute-forcing the entire quantum circuit’s state space (which would have required storing 2^53 complex amplitudes, roughly 144 petabytes at 16 bytes each), the team used tensor networks: mathematical structures that represent a quantum circuit as a web of small tensors, one per gate or input state, which are then contracted together in a carefully chosen order. This drastically reduced memory and time requirements.

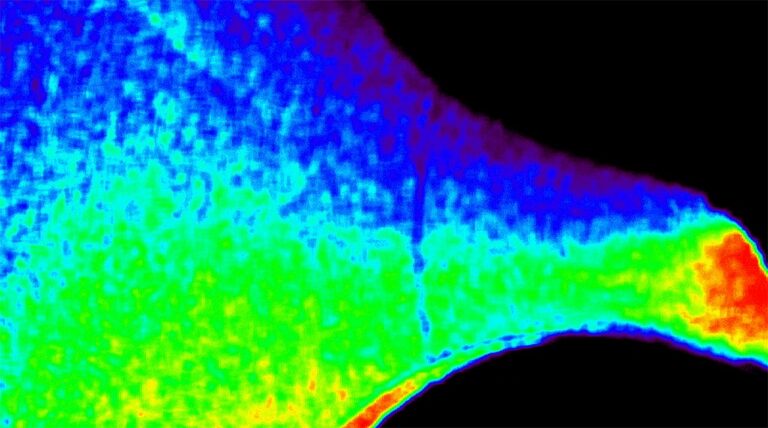
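To make the idea concrete, here is a minimal sketch of computing one output amplitude by contracting a tensor network instead of building the full state vector. The 3-qubit circuit (Hadamards, two CZ gates, Hadamards) and the helper names `basis`, `amplitude`, and `full_state` are illustrative assumptions, not the circuit or code the researchers used.

```python
import itertools
import numpy as np

# Gate tensors. H maps input index -> output index as H[out, in].
H = np.array([[1, 1], [1, -1]], dtype=np.complex128) / np.sqrt(2)
# Reshape the 4x4 CZ matrix into a rank-4 tensor (out_a, out_b, in_a, in_b).
CZ = np.diag([1, 1, 1, -1]).astype(np.complex128).reshape(2, 2, 2, 2)


def basis(bit):
    """Return |0> or |1> as a length-2 vector."""
    v = np.zeros(2, dtype=np.complex128)
    v[bit] = 1.0
    return v


def amplitude(bits):
    """<bits| H^3 . CZ(1,2) . CZ(0,1) . H^3 |000> as a single contraction."""
    zero = basis(0)
    return np.einsum(
        "a,b,c,da,eb,fc,ghde,ijhf,kg,li,mj,k,l,m->",
        zero, zero, zero,          # input qubits a, b, c
        H, H, H,                   # first Hadamard layer
        CZ,                        # CZ on qubits 0 and 1
        CZ,                        # CZ on qubits 1 and 2
        H, H, H,                   # second Hadamard layer
        basis(bits[0]), basis(bits[1]), basis(bits[2]),  # output bitstring
        optimize="greedy",         # let NumPy pick a pairwise contraction order
    )


def full_state():
    """Brute-force reference: build the 8x8 unitary and apply it to |000>."""
    eye = np.eye(2, dtype=np.complex128)
    cz4 = CZ.reshape(4, 4)
    h3 = np.kron(H, np.kron(H, H))
    unitary = h3 @ np.kron(eye, cz4) @ np.kron(cz4, eye) @ h3
    return unitary[:, 0]


if __name__ == "__main__":
    state = full_state()
    for bits in itertools.product((0, 1), repeat=3):
        index = 4 * bits[0] + 2 * bits[1] + bits[2]
        print(bits, np.isclose(amplitude(bits), state[index]))
```

At three qubits both approaches are trivial. The point is that the contraction never materializes a vector of 2^n amplitudes, and its cost depends on the contraction order rather than directly on the size of the state space.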

2. High-Bandwidth GPU Clusters

The researchers deployed 1,432 NVIDIA A100 GPUs (among the most advanced in the world) with high interconnect bandwidth and parallel computation support. Each GPU handled specific parts of the circuit, all coordinated via optimized communication protocols.

3. Smart Circuit Partitioning

By slicing the quantum circuit cleverly into smaller sub-circuits and minimizing the number of quantum gates between them, the team was able to simulate the system more efficiently. Circuit partitioning also helped reduce inter-node data transfer bottlenecks.
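One common way to partition a tensor-network contraction is index slicing: fix one shared index to each of its values, contract the smaller network for each value independently, and add the results. The sketch below assumes random stand-in tensors and a hypothetical round-robin assignment of slices to workers. It illustrates the general technique, not the team’s actual partitioning scheme.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two stand-in tensors sharing indices s (to be sliced) and k (contracted).
A = rng.standard_normal((4, 8, 6))  # indices i, s, k
B = rng.standard_normal((6, 8, 5))  # indices k, s, j

# Reference: contract everything in one go.
full = np.einsum("isk,ksj->ij", A, B)


def contract_slice(s):
    """Contract the network with the shared index s fixed to one value."""
    return np.einsum("ik,kj->ij", A[:, s, :], B[:, s, :])


# Each slice is an independent task, so slices can be handed to different
# workers. Here: a hypothetical round-robin assignment to 3 workers.
n_slices = A.shape[1]
n_workers = 3
assignments = {w: list(range(w, n_slices, n_workers)) for w in range(n_workers)}

# Every worker sums its own slices; the partial sums are then added up.
partials = [sum(contract_slice(s) for s in slices) for slices in assignments.values()]
sliced = sum(partials)

print(np.allclose(full, sliced))
```

Because each slice needs only its own piece of the data, the workers do not have to exchange anything until the final sum, which is why slicing helps with inter-node transfer bottlenecks.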

4. FP64 Precision and Memory Optimization

High double-precision floating-point (FP64) performance and careful memory handling ensured that the simulation stayed accurate and efficient even with millions of complex-number calculations running simultaneously.
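To see why precision matters when many complex terms are accumulated, the sketch below adds the same random unit-magnitude complex numbers in single and double precision and compares both against an accurately rounded reference. The term count and the sequential accumulation via `np.cumsum` are assumptions chosen to make the effect visible. A real simulation accumulates values differently.

```python
import math
import numpy as np

rng = np.random.default_rng(42)
n_terms = 200_000

# Random unit-magnitude complex terms, stored with FP64 and FP32 components.
phases = rng.uniform(0.0, 2.0 * np.pi, n_terms)
terms_fp64 = np.exp(1j * phases)               # complex128
terms_fp32 = terms_fp64.astype(np.complex64)   # complex64

# Reference sum using math.fsum, which tracks partial sums exactly.
exact = complex(math.fsum(terms_fp64.real), math.fsum(terms_fp64.imag))

# np.cumsum accumulates term by term in the array's own precision.
sum_fp64 = complex(np.cumsum(terms_fp64)[-1])
sum_fp32 = complex(np.cumsum(terms_fp32)[-1])

err_fp64 = abs(sum_fp64 - exact)
err_fp32 = abs(sum_fp32 - exact)

print(f"FP64 accumulation error: {err_fp64:.3e}")
print(f"FP32 accumulation error: {err_fp32:.3e}")
```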

What This Means for Quantum Supremacy

The classical simulation of a 53-qubit system, previously thought impractical, doesn’t negate Google’s milestone but rather raises the bar. Here’s why this is important:

Quantum Advantage Isn’t Linear: As quantum processors scale, simulating them classically becomes exponentially harder. But classical methods are also improving with better hardware and smarter algorithms.

Supremacy vs. Utility: Google’s experiment was a demonstration of potential, not practicality. Meanwhile, classical computing continues to dominate real-world applications — from AI to simulations — because of decades of optimization and infrastructure.

Benchmark Reset: Now that a 53-qubit system has been simulated, future claims of supremacy will require even more advanced quantum systems — possibly beyond 70-80 qubits with high fidelity — to truly outpace classical contenders.
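A quick back-of-the-envelope calculation shows the exponential scaling behind these points. It assumes a brute-force simulation that stores every amplitude as a 16-byte complex number. Tensor-network methods avoid storing the full state, so this is an upper bound on memory rather than the cost of the actual simulation.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 value per amplitude


def state_vector_bytes(n_qubits):
    """Memory needed to hold all 2^n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


for n in (53, 54, 70, 80):
    petabytes = state_vector_bytes(n) / 1e15
    print(f"{n} qubits: {petabytes:,.0f} PB")
```

Each added qubit doubles the requirement, which is why a jump from 53 to 70 or 80 qubits changes the picture so dramatically for brute-force approaches.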

Implications for the Future of Computing

1. The Rise of Hybrid Systems

Rather than thinking of classical and quantum computing as rivals, this achievement encourages the rise of hybrid computing models, where quantum processors handle specific tasks and classical machines handle optimization, error correction, and orchestration.

2. Need for Quantum Error Correction

Google’s Sycamore still suffers from noise and decoherence. Until quantum error correction becomes viable and scalable, classical supercomputers will keep catching up, accurately simulating small, noisy quantum systems.

3. Increased Investment in Classical Algorithms

This accomplishment underscores the importance of continually optimizing classical simulation algorithms. Tensor networks, variational methods, and Monte Carlo simulations are becoming more potent in bridging the gap.

4. Hardware Acceleration is Still King

GPUs and tensor cores — the backbone of AI — are now proving vital in simulating quantum systems. As GPU performance improves (e.g., NVIDIA’s H100 and future Blackwell GPUs), classical simulations will only get more powerful.

Conclusion: Quantum Leap or Classical Comeback?

The successful simulation of Google’s 53-qubit Sycamore processor by 1,432 GPUs is a historic benchmark in classical computing, reminding us not to underestimate the ever-expanding power of traditional architectures. While quantum computing remains the future, classical computing is not backing down — it’s evolving.

Quantum supremacy is not a single finish line. It’s a moving target — and this milestone only proves that the race is far from over.
